The rise of conversational artificial intelligence has transformed how companies interact with customers and optimize operations. Among these technologies, ChatGPT has garnered growing interest. It might seem that simply plugging in the ChatGPT API is enough to get a powerful, ready-to-use AI, but the reality is more complex. This guide explains what it takes to integrate ChatGPT into a custom-built solution.

In this guide:

- Understanding ChatGPT terminology

- Benefits of integrating ChatGPT

- Customizing your integration

- Key steps for a successful ChatGPT integration

Understanding ChatGPT Terminology

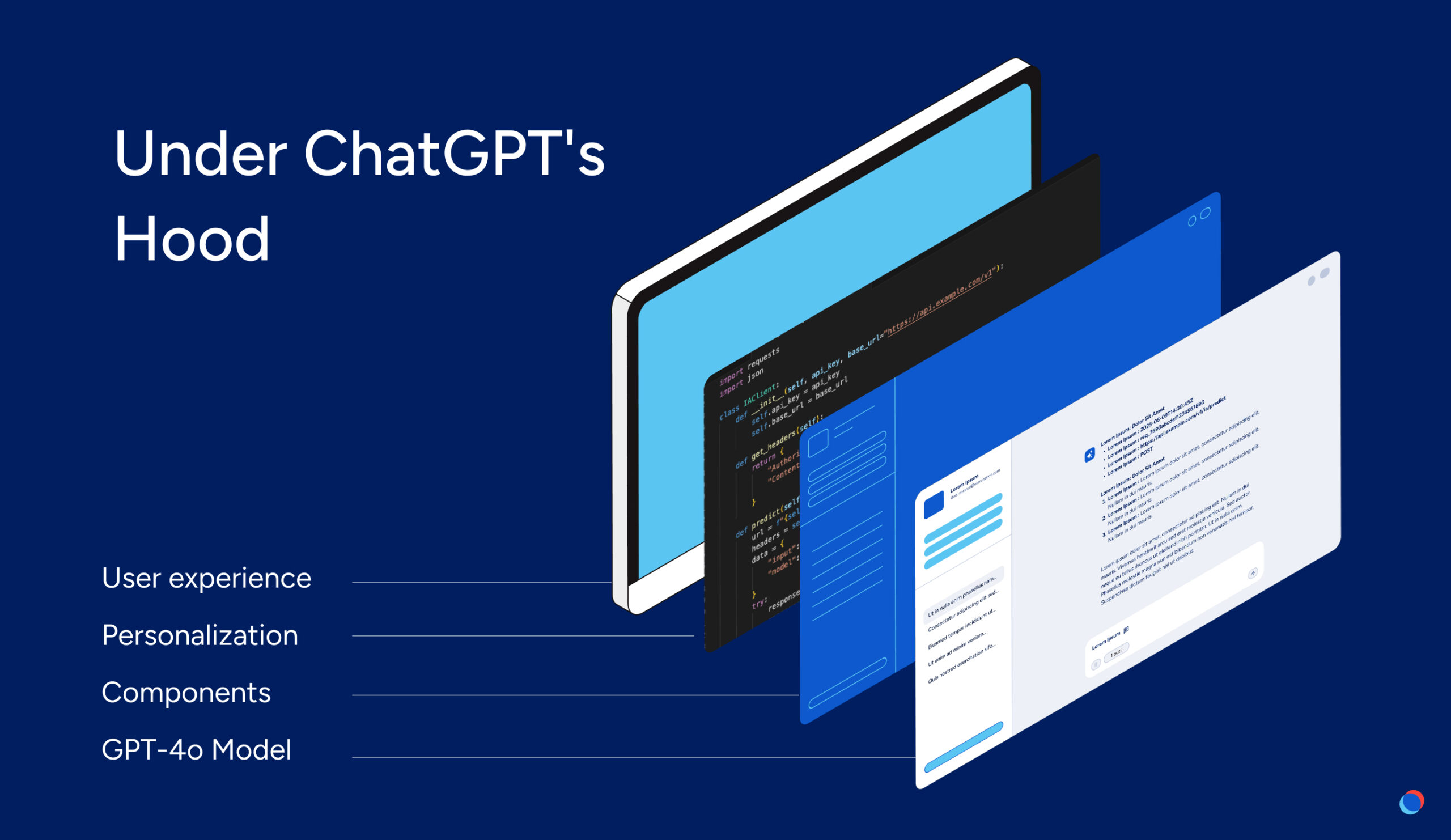

It would be unwise to treat ChatGPT as a plug-and-play service for your app. The tool is made up of several distinct components: ChatGPT is the product, and GPT-4o is its underlying language model, supplemented by preconfigured instructions defined by OpenAI. Those instructions are referred to here as its Master Prompt (more commonly called a system prompt).

Benefits of Integrating ChatGPT

Customizing Your Integration

Integrating a model like GPT-4o into your application means you’ll need to recreate part of ChatGPT’s behavior yourself, because the API gives you the model, not the product built around it. Failing to anticipate this distinction can lead to inefficient implementations, unexpected results, and disappointing user experiences.

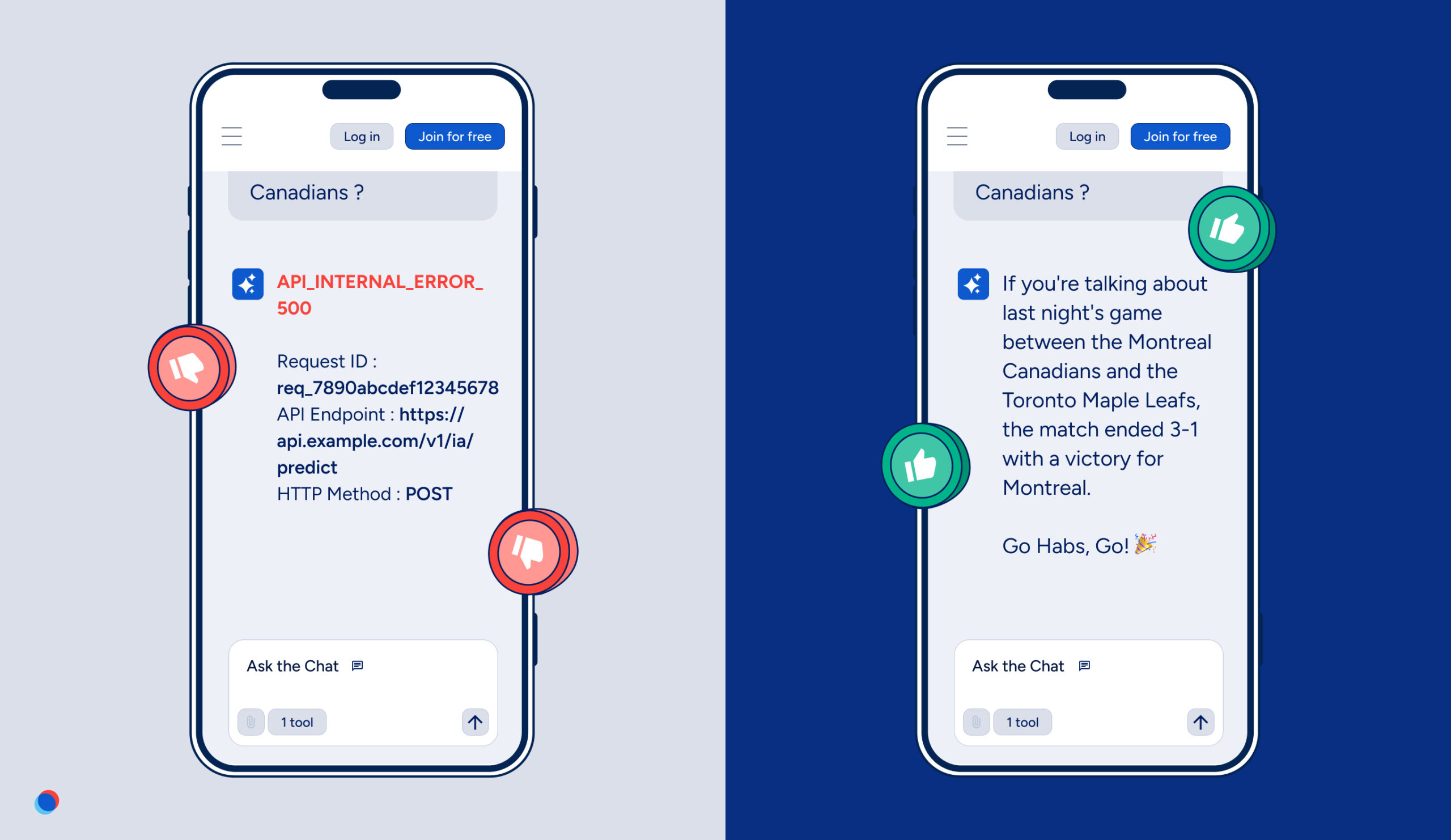

For example, let’s say a sports organization is configuring its club’s app. It expects GPT-4o to provide fans with accurate, consistently formatted responses about recent games. But the app can’t correctly answer “What was the final score of yesterday’s game?”

Why? Because the model on its own has no knowledge of the current date or of the club’s schedule. Unless the application supplies both, GPT-4o can’t work out what “yesterday” refers to. In OpenAI’s ChatGPT interface, that kind of configuration is already handled. This might seem like a minor issue, but it can create real friction for users, enough to drive them elsewhere for answers.
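To make this concrete, here is a minimal Python sketch of how an app might hand the model the date and recent results with every question. It assumes the app keeps results in its own data store. The `build_messages` function, the `RESULTS` data, and the scores are hypothetical; only the system/user message structure mirrors the chat-style format.

```python
from datetime import date, timedelta

# Hypothetical results; a real app would load these from its own database.
RESULTS = {
    date(2025, 3, 8): "Home FC 2-1 Away United",
}


def build_messages(question: str, today: date, results: dict) -> list:
    """Build a chat-style message list that states the date and recent results."""
    yesterday = today - timedelta(days=1)
    result_lines = [
        f"- {day.isoformat()}: {score}" for day, score in sorted(results.items())
    ]
    system_prompt = "\n".join(
        [
            "You answer fans' questions about the club.",
            f"Today's date is {today.isoformat()}.",
            f"Yesterday's date was {yesterday.isoformat()}.",
            "Recent results:",
            *result_lines,
            "If a result is not listed, say you do not have it.",
        ]
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    "What was the final score of yesterday's game?", date(2025, 3, 9), RESULTS
)
```

The resulting `messages` list is what the app would send to the model. Because the date and results are rebuilt on every call, “yesterday” always resolves against current data.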

The filtering and moderation systems included in ChatGPT are also not automatically part of the raw GPT-4o API. A business deploying GPT-4o in a regulated sector, such as finance, healthcare, or insurance, must define strict rules of its own to limit inappropriate answers, biases, and hallucinations.
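One way to picture such rules is a thin wrapper that checks both the user’s input and the model’s answer before anything reaches the user. The sketch below is illustrative only: `BLOCKED_TERMS`, `FALLBACK`, `is_flagged`, and `guarded_reply` are hypothetical names, and a keyword list stands in for what would realistically be a dedicated moderation service plus rules reviewed by compliance.

```python
from typing import Callable

# Hypothetical blocklist for illustration; not a substitute for real moderation.
BLOCKED_TERMS = ("guaranteed return", "diagnosis")
FALLBACK = "I can't help with that here. Please contact an advisor."


def is_flagged(text: str) -> bool:
    """Return True if the text contains any blocked term (case-insensitive)."""
    lowered = text.lower()
    return any(term in lowered for term in BLOCKED_TERMS)


def guarded_reply(user_input: str, generate: Callable[[str], str]) -> str:
    """Check the input, call the model, then check the output before returning it."""
    if is_flagged(user_input):
        return FALLBACK
    answer = generate(user_input)
    if is_flagged(answer):
        return FALLBACK
    return answer
```

Here `generate` is whatever function actually calls the model. Keeping it as a parameter means the checks can be tested without a live API call.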

And finally, to be truly useful to end users, the integrated tool must “speak their language.” For example, a legal tech company needs to ensure GPT-4o uses appropriate legal terminology and doesn’t reinterpret legislation or paraphrase in ways that stray from the legal framework.

In short, GPT-4o must be treated as a standalone product component, one that needs to be shaped carefully to meet the real needs of its users.

Key Steps for a Successful ChatGPT Integration

- Define your Master Prompt (instructions): This is the set of baseline instructions that guides how the model should respond. Your prompt should clearly define tone, expected response types, off-limits topics, and any required boundaries.

- Test and fine-tune interactions: Once the model is integrated, it’s critical to conduct thorough testing to ensure the responses align with user needs. Anticipate potential errors (ambiguities, confusion, hallucinations) and adjust the prompt and settings accordingly.

- Design the experience: A high-performing AI model is more than just an API. It should be embedded within mechanisms for validation, moderation, and response control. For example, a user interface might include pre-selected response options to reduce errors and improve fluidity. Response length should also be configurable to manage costs tied to the tool’s use.

- Monitor performance: The model must be secure, monitored, and continuously optimized. Logging interactions helps identify recurring issues and refine the model and its settings over time.
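The steps above can be sketched together in a few lines of Python. This is a minimal illustration, not a reference implementation: `MASTER_PROMPT`, `build_request`, and `answer_with_logging` are hypothetical names, and the request fields (`model`, `max_tokens`, `messages`) follow the chat-style request format as an assumption that should be checked against the current API reference.

```python
import json
import logging
import time
from typing import Callable

logger = logging.getLogger("assistant")

# Hypothetical Master Prompt: tone, response type, and off-limits topics.
MASTER_PROMPT = (
    "You are a support assistant. Be concise and polite. "
    "Answer in at most three sentences. Do not give legal or medical advice."
)


def build_request(question: str, max_tokens: int = 300) -> dict:
    """Assemble the request; max_tokens caps response length to control cost."""
    return {
        "model": "gpt-4o",
        "max_tokens": max_tokens,
        "messages": [
            {"role": "system", "content": MASTER_PROMPT},
            {"role": "user", "content": question},
        ],
    }


def answer_with_logging(question: str, send: Callable[[dict], str]) -> str:
    """Send the request through `send` and log the exchange for later review."""
    request = build_request(question)
    started = time.monotonic()
    reply = send(request)
    logger.info(
        json.dumps(
            {
                "question": question,
                "reply": reply,
                "seconds": round(time.monotonic() - started, 3),
            }
        )
    )
    return reply
```

Passing the API call in as `send` keeps the sketch independent of any particular client library and makes it easy to replay logged questions against a new prompt during testing.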

Integrating a conversational AI model like GPT-4o presents a strong opportunity for differentiation, if approached with a product mindset. A well-configured AI can boost customer service efficiency, automate repetitive tasks, and enrich the user experience. But implementing it without tailored configurations or proper oversight can lead to counterproductive outcomes.

We’re only beginning to see the full potential of ChatGPT and conversational AI. For companies that integrate it thoughtfully and adapt it to their specific needs, this technology is a powerful lever for innovation and performance.